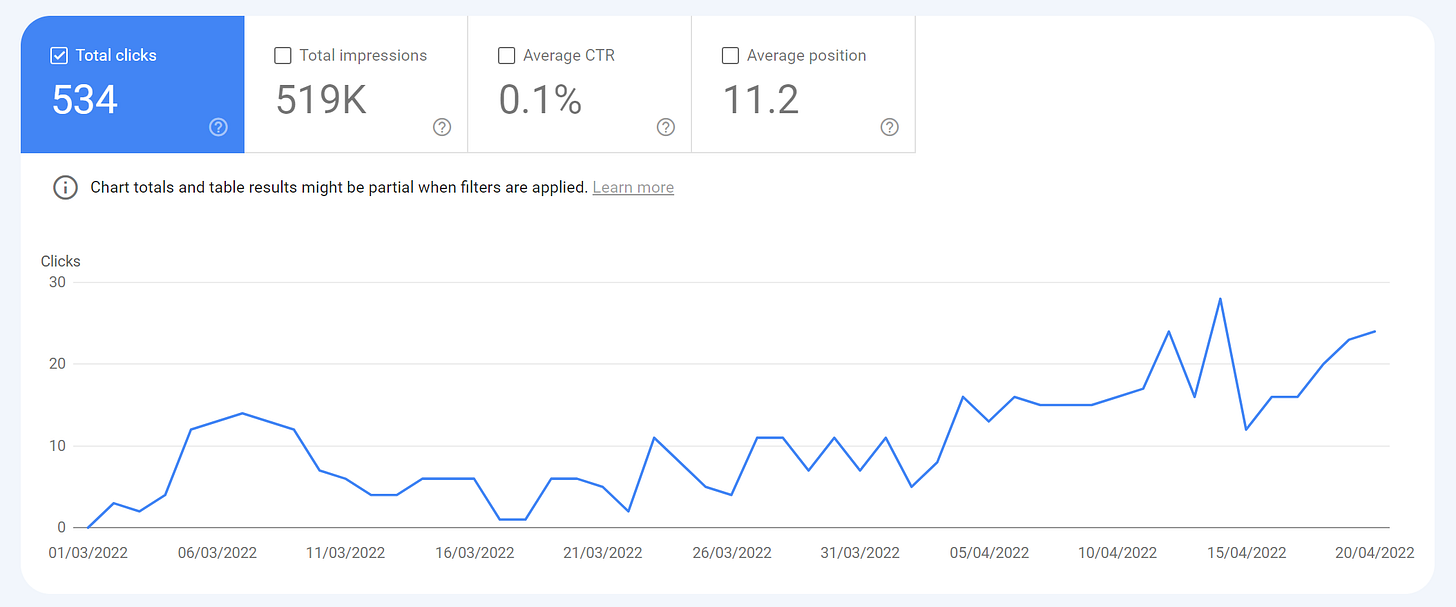

Case Study: From 0 to 400K+ Clicks in 4 Months

In December, one of my clients contacted me about their project. I had worked in that niche before, so I took it on.

In less than 4 months we went from 0 to 400K+ clicks, and the site now gets almost 300K clicks per month.

I have divided what I did into 3 parts:

1. Found a Gold Mine
2. Testing GPT-3 Content
3. Other SEO Implementations

I won’t reveal the website’s name, for obvious reasons.

1. Found a Gold Mine

This technique may not be useful to many, but it is so interesting that I couldn’t resist sharing it.

A few years ago, while working on another project in the same niche as my client, I found a website getting good traffic (in the millions).

So after taking on this project, while doing competitor analysis, I decided to recheck that website.

And guess what? That website was no longer indexed in Google or Bing! It was live and all its content was still there, but it was not getting any traffic.

I don’t know why it was deindexed. My wild guess is that Google penalized it for building spammy backlinks or violating some other guideline, and the owners never bothered to fix the problem.

So the next thought that came to my mind was: what if I scraped this whole website and pushed all the content to my client’s website?

First question: will Google consider it plagiarised content? I think not, because the content is no longer in Google’s index, and it has been deindexed for more than a year.

So I decided to experiment with it. Luckily, it was a WordPress website, and my client was using WordPress too.

Very few people know this, but you can access the content of most WordPress websites (unless the site has disabled or restricted it) in JSON format using the WordPress REST API.

For example, the following API URL returns the slug, author, categories, publish date, and modified date of up to 100 published posts per page:

https://domain.com/wp-json/wp/v2/posts?status=publish&page=1&per_page=100&_fields=slug,author,categories,date,modified

There are many such parameters you can use to get any data you want.
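Here is a minimal Python sketch of how that URL is put together, assuming the site’s REST API is publicly enabled. The helpers `posts_url` and `all_page_urls` are hypothetical names for illustration, not part of WordPress. Two REST API details matter when listing every post: `per_page` accepts at most 100, and the first response carries an `X-WP-TotalPages` header that tells you how many pages to request.

```python
from urllib.parse import urlencode

# The fields requested in the example URL; _fields trims each post object
# down to just these keys.
FIELDS = ["slug", "author", "categories", "date", "modified"]


def posts_url(domain: str, page: int, per_page: int = 100) -> str:
    """Build a REST API URL for one page of published posts (hypothetical helper)."""
    # WordPress rejects per_page values above 100.
    if not 1 <= per_page <= 100:
        raise ValueError("per_page must be between 1 and 100")
    params = {
        "status": "publish",
        "page": page,
        "per_page": per_page,
        "_fields": ",".join(FIELDS),
    }
    # safe="," keeps the comma-separated field list readable, as in the post.
    return f"https://{domain}/wp-json/wp/v2/posts?{urlencode(params, safe=',')}"


def all_page_urls(domain: str, total_pages: int) -> list[str]:
    """One URL per page; total_pages would come from the X-WP-TotalPages header."""
    return [posts_url(domain, p) for p in range(1, total_pages + 1)]


print(posts_url("domain.com", 1))
```

Building the URLs separately from fetching them keeps the pagination logic easy to check before any request is made.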

And you can use the WordPress REST API to publish content too (this requires authenticated requests).

So we wrote a script to take all the content from the competitor’s website and push it directly to our client’s website.

I used an Indexing API tool to boost the indexing rate a little.

Result? I got lucky: Google didn’t detect plagiarism, and we got 150K clicks in just 3 months! We published around 4,000 pieces of content in January, and traffic is still growing every day.

So now all we need is a list of websites that have been deindexed from Google and whose owners are too busy to make fixing it a priority. How do we get that list? I don’t know yet; comment below if you can come up with something.

2. Testing GPT-3 Content

I have been seeing a lot of websites testing GPT-3 content and generating a good amount of traffic.

Google even recently updated its guidelines to state that “Automatically generated content intended to manipulate search rankings” is against best practices.

I am sure they do not have any system in place yet to fight GPT-3/AI-generated content, but I think it will be a very high-priority problem for them, and Google will definitely come up with an algorithm update for it soon.

I did ask John Mu about this, but I did not get a clear answer.

So we went ahead to see what content we could create at scale using GPT-3.

The challenge was to find a keyword pattern, e.g. “Write a few lines on [any topic name]”. We found a pattern with around 400 topics in it, but it has very low clicks per search, hence a low CTR.

We recently published 400 pages and have gained 500+ clicks so far, and the number is still growing. I am going to experiment more with some high-volume keywords and see how it goes.

3. Other SEO Implementations

The rest of our traffic comes from traditional SEO practices.

These are the things I did, in order:

Building a topic map: I did a lot of research and competitor analysis and built a huge content library. Every topic and sub-topic was mapped to a primary category, a sub-category, and a primary keyword. This technique also aligns with semantic SEO and covering as many entities as possible. The goal was to gain topical authority in the niche.
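One way to hold such a topic map is a small record per topic, nested by category and sub-category. This Python sketch is only an illustration: the `Topic` fields mirror the mapping described above, while `build_topic_map` and the placeholder category names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Topic:
    title: str
    category: str         # primary category
    sub_category: str
    primary_keyword: str


def build_topic_map(topics):
    """Nest topics as {category: {sub_category: [Topic, ...]}} (hypothetical helper)."""
    tree = defaultdict(lambda: defaultdict(list))
    for t in topics:
        tree[t.category][t.sub_category].append(t)
    # Convert to plain dicts so the result prints and compares cleanly.
    return {cat: dict(subs) for cat, subs in tree.items()}


topic_map = build_topic_map([
    Topic("Topic 1", "Category A", "Sub A1", "keyword 1"),
    Topic("Topic 2", "Category A", "Sub A2", "keyword 2"),
])
print(list(topic_map["Category A"]))
```

Keeping the hierarchy explicit makes gaps easy to spot: a sub-category with only one or two topics is a sign that coverage is thin there.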

We divided all the content into priority buckets by volume and difficulty.
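A priority bucket can be as simple as two thresholds. In this Python sketch the cut-offs (`VOLUME_CUTOFF`, `DIFFICULTY_CUTOFF`) and the bucket order are hypothetical assumptions, since the post does not state which values were used; it puts easy keywords before hard ones, in line with the low-difficulty-first strategy described later.

```python
from dataclasses import dataclass

# Hypothetical cut-offs; the actual thresholds used are not stated.
VOLUME_CUTOFF = 1000      # monthly searches
DIFFICULTY_CUTOFF = 30    # keyword difficulty on a 0-100 scale


@dataclass
class Keyword:
    term: str
    volume: int
    difficulty: int


def bucket(kw: Keyword) -> int:
    """Lower number = publish sooner. Easy keywords come before hard ones."""
    easy = kw.difficulty < DIFFICULTY_CUTOFF
    high_volume = kw.volume >= VOLUME_CUTOFF
    if easy:
        return 1 if high_volume else 2
    return 3 if high_volume else 4


def prioritise(keywords):
    """Sort by bucket, then by volume (highest first) within each bucket."""
    return sorted(keywords, key=lambda kw: (bucket(kw), -kw.volume))
```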

We created a content brief/template for each category.

The next step was to hire a team of content writers and train them on our content briefs.

Since the client wanted to move very fast, we skipped content editing and optimization and pushed all the content in bulk as soon as possible.

Building internal links: we made sure all the content was well interlinked, using a related-content section as well as links inside the content.
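A related-content section can be driven by the same topic map. This Python sketch is one possible scoring rule, not the method used here: it assumes each post is a dict with hypothetical `slug`, `category` and `sub_category` keys, scores a shared sub-category higher than a shared category, and skips unrelated posts.

```python
def related_posts(post, posts, limit=5):
    """Return slugs of up to `limit` related posts (hypothetical helper).

    Scoring: same sub-category +2, same category +1; zero-score posts are dropped.
    """
    def score(other):
        s = 0
        if other["sub_category"] == post["sub_category"]:
            s += 2
        if other["category"] == post["category"]:
            s += 1
        return s

    candidates = [p for p in posts if p["slug"] != post["slug"]]
    scored = [(score(p), p["slug"]) for p in candidates]
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted((x for x in scored if x[0] > 0), key=lambda x: (-x[0], x[1]))
    return [slug for _, slug in ranked[:limit]]
```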

My client already had a couple of other websites in the same niche with a good Domain Rating, so I pushed relevant content on those websites and built 10-20 backlinks from them.

For some categories, we also scraped other websites’ content and rewrote it, because the content ranking in the top 10 results was very similar.

In 3 months we pushed more than 6,000 pieces of content. The strategy was to target a large number of low-volume/low-difficulty topics first and, once we had gained authority, push high-volume/high-difficulty topics.

The next steps are to optimize all the content for better rankings and user experience, improve the site structure, fix all the technical issues, improve page speed, implement schema markup, and much more.

So that is how we went from 0 to 410K clicks in just 4 months.

Hope you got some interesting insights from this.

Thanks for reading!