Recently one of my clients came up with a request: they wanted to know how many pages of each website on their list are indexed on Google.

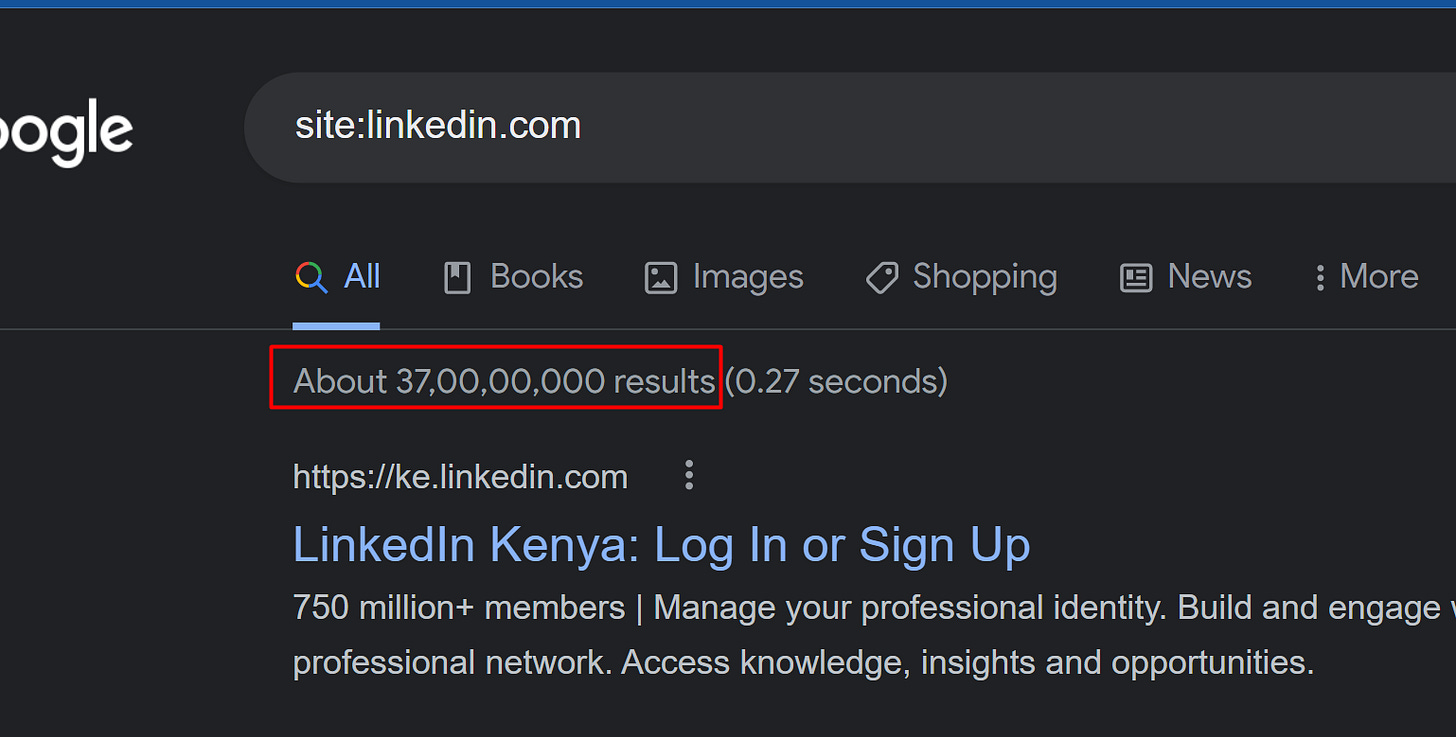

Usually, you can check this quickly with the “site:example.com” operator on Google.

But here the challenge was that the client had a list of 100+ websites.

The goal was to find which websites are the smallest while still getting a good amount of traffic. That would show us where the low-hanging fruit is.

Now that’s where SERP API helps!

What is SERP API? SERP API is an API that scrapes Google search results for you and returns them as structured data. Literally, anything on the results page! Here are some of the things you can fetch from SERP API:

How to do it?

First, sign up for SERP API and get your API key. The first 100 searches are free, so with a list of 100+ websites you may run past the free quota.
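Each search is ultimately an HTTP GET request to SERP API. If you want to see what such a request looks like, or call the API where the Python client used later in this post isn't installed, here is a minimal standard-library sketch. It assumes the JSON endpoint `https://serpapi.com/search.json` and reads the key from an environment variable we chose to call `SERPAPI_API_KEY`; the function names are hypothetical, so check SERP API's documentation before relying on any of it.

```python
import json
import os
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: SERP API's JSON endpoint. Verify against the official docs.
SEARCH_ENDPOINT = "https://serpapi.com/search.json"


def build_search_url(query, api_key=None):
    """Build the GET URL for one Google search through SERP API (hypothetical helper).

    If api_key is None, the key is read from the SERPAPI_API_KEY
    environment variable (a name we picked, not one the API requires).
    """
    key = api_key if api_key is not None else os.environ["SERPAPI_API_KEY"]
    params = {"engine": "google", "q": query, "api_key": key}
    # urlencode percent-escapes characters such as the ':' in "site:..."
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def fetch_results(query, api_key=None, timeout=30):
    """Send the request and decode the JSON body into a dict (makes a network call)."""
    with urlopen(build_search_url(query, api_key), timeout=timeout) as response:
        return json.load(response)
```

For example, `build_search_url("site:example1.com", api_key="TEST")` gives `https://serpapi.com/search.json?engine=google&q=site%3Aexample1.com&api_key=TEST`. Assuming the endpoint above is right, `fetch_results(...)` should return a dictionary much like the one the client's `get_dict()` returns.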

Then you only need the script below to scrape the number of Google search results for each site.

Let’s understand the code.

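Import the libraries. `GoogleSearch` comes from SERP API's Python client (installed with `pip install google-search-results`), and pandas is used to build the final table.

```python
from serpapi import GoogleSearch
import pandas as pd
```

Make a list of all the queries you would search for on Google using the site operator.

Then declare two lists, one for the number of pages and one for the site names, so that we can keep appending our data to them.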
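With 100+ websites, typing every `site:` query by hand gets tedious. A minimal sketch for generating the `queries` list from a plain list of domains or URLs follows; `to_site_query` and `build_queries` are hypothetical helpers, and dropping a leading `www.` is our own choice, not something the site operator requires.

```python
from urllib.parse import urlparse


def to_site_query(entry):
    """Turn 'https://www.example1.com/page' or 'example1.com' into 'site:example1.com'."""
    entry = entry.strip()
    # urlparse only fills in netloc when the string has a scheme, so add one if needed
    if "://" not in entry:
        entry = "https://" + entry
    host = urlparse(entry).netloc.lower()
    # Dropping "www." makes the query cover other subdomains too;
    # remove these two lines if you only want the www host counted
    if host.startswith("www."):
        host = host[len("www."):]
    return f"site:{host}"


def build_queries(domains):
    """Build one site: query per domain, skipping blanks and duplicates (order kept)."""
    queries = [to_site_query(d) for d in domains if d.strip()]
    return list(dict.fromkeys(queries))
```

For example, `build_queries(["https://www.example1.com/", "example2.com"])` returns `['site:example1.com', 'site:example2.com']`. If the client's sites are in a text file with one domain per line (say a hypothetical `sites.txt`), `build_queries(open("sites.txt").read().splitlines())` gives you the whole list at once.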

```python
queries = ['site:example1.com', 'site:example2.com']  # add all the queries here

nb_pages = []
site_name = []
```

Write a `for` loop that runs each query through SERP API and gets the JSON output back as a Python dictionary.

Since SERP API returns a nested dictionary, you need to pick out the exact values you want. Here, both the total number of results and the query as Google displayed it sit under the `search_information` key.

Keep appending the data to the lists we made above. Once you have everything in the lists, create a pandas DataFrame from them and save it as a CSV file.
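The `.get(..., {})` chaining in the loop that follows is one way to pull values out of the nested dictionary without crashing when a key is missing. If you later want values from deeper down, such as a link inside `organic_results`, a small hypothetical helper like `dig` generalizes the same idea to any depth. The `sample` dictionary is invented and heavily trimmed to show the nesting; it is not real SERP API output.

```python
def dig(data, *keys, default=None):
    """Follow a path of dict keys and list indexes through nested data.

    Returns `default` as soon as a step is missing, instead of raising.
    """
    current = data
    for key in keys:
        try:
            current = current[key]
        except (KeyError, IndexError, TypeError):
            return default
    return current


# Made-up, trimmed response, only to illustrate the nesting
sample = {
    "search_information": {
        "query_displayed": "site:example1.com",
        "total_results": 1234,
    },
    "organic_results": [
        {"position": 1, "link": "https://example1.com/"},
    ],
}

print(dig(sample, "search_information", "total_results"))  # 1234
print(dig(sample, "organic_results", 0, "link"))            # https://example1.com/
print(dig(sample, "ads", 0, "link"))                        # None
```

Returning a default instead of raising keeps a 100-site loop running even when one response comes back without the section you expected.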

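```python
for q in queries:
    params = {
        "engine": "google",
        "q": q,
        "api_key": "Enter Your SERP API Key here",
    }

    # Run the search and get the JSON response as a nested Python dict
    search = GoogleSearch(params)
    results = search.get_dict()

    # The result count and the displayed query both sit under "search_information";
    # .get(..., {}) avoids a KeyError if that section is missing
    search_info = results.get('search_information', {})
    search_results = search_info.get('total_results')
    query = search_info.get('query_displayed', q)  # fall back to our own query

    nb_pages.append(search_results)
    site_name.append(query)

    print(f'{search_results} {query}')

# Build one data frame with both columns and save it as CSV
df = pd.DataFrame({'site': site_name, 'indexed_pages': nb_pages})
df.to_csv('indexed_pages.csv', index=False)  # any file name works
```

That’s it. You will get the number of search results for each query. Keep in mind that this is Google’s own estimate of how many pages it has indexed for the site, so treat it as approximate.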
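SERP API only gives you the page counts; the traffic side of the original goal has to come from your own data, such as analytics or an SEO tool. Assuming you have that in a second table with a `site` column of bare domains and a hypothetical `monthly_traffic` column, here is a minimal pandas sketch that joins the two and lists the smallest sites above a traffic threshold. The function name, column names, and threshold are all illustrative.

```python
import pandas as pd


def rank_small_sites(pages, traffic, min_traffic):
    """Return sites with at least `min_traffic` visits, smallest index first.

    pages:   columns 'site' (e.g. 'site:example1.com') and 'indexed_pages'
    traffic: columns 'site' (e.g. 'example1.com') and 'monthly_traffic'
    """
    # Strip the "site:" prefix so both tables use bare domains as the join key
    pages = pages.assign(site=pages["site"].str.replace(r"^site:", "", regex=True))
    merged = pages.merge(traffic, on="site", how="inner")
    # Counts may be missing (None) or stored as text; coerce and drop the unusable ones
    merged["indexed_pages"] = pd.to_numeric(merged["indexed_pages"], errors="coerce")
    merged = merged.dropna(subset=["indexed_pages"])
    busy = merged[merged["monthly_traffic"] >= min_traffic].copy()
    # Visits per indexed page: a rough signal of how much traffic each page pulls in
    busy["traffic_per_page"] = busy["monthly_traffic"] / busy["indexed_pages"].clip(lower=1)
    return busy.sort_values("indexed_pages").reset_index(drop=True)
```

Here `pages` can be the page-count table produced by the script above (or read back from its CSV). The sites at the top of the result are small but already attract traffic, which is roughly the "low-hanging fruit" the client was after.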

There are a lot of other cool things you can do with SERP API. You can sign up and try things out in their playground: https://serpapi.com/playground.

Hope this helped. Thanks for reading. Sharing is caring!